Why This Matters Right Now

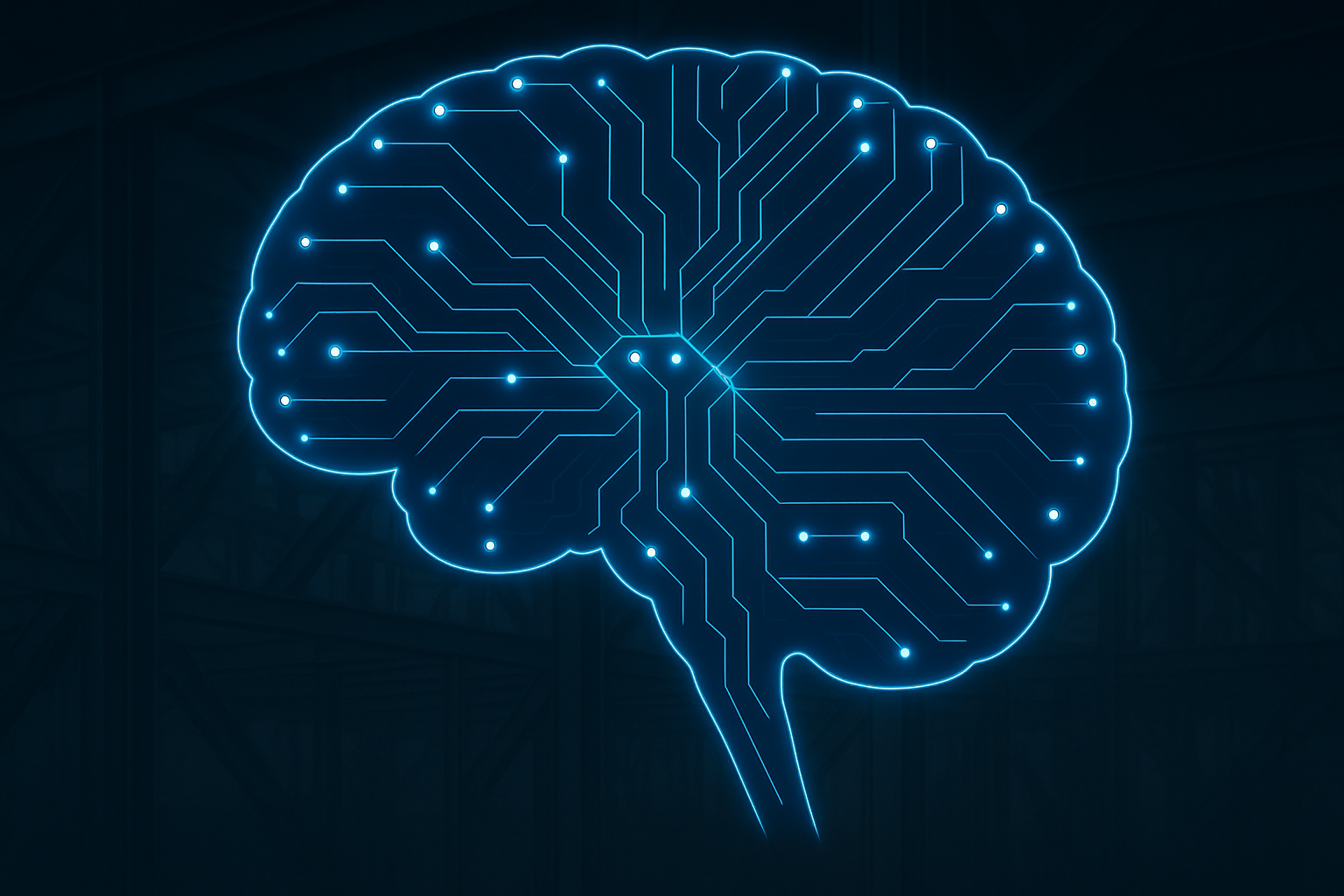

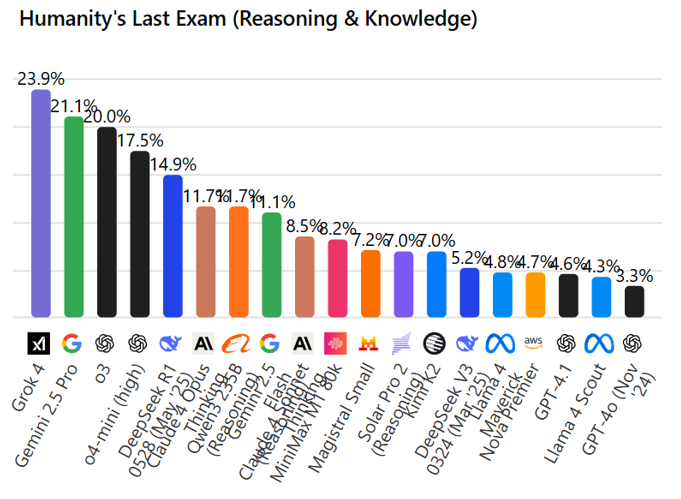

LLMs have sprinted from novelty chatbots to board‑level agenda items in under two years. New releases like GPT‑4.5, Claude 4, and Llama 4 offer million‑token context windows and benchmark‑leading performance—enough juice to read full maintenance manuals, draft dynamicpricing memos, or generate code for a plant‑floor integration overnight.

What Exactly Is an LLM?

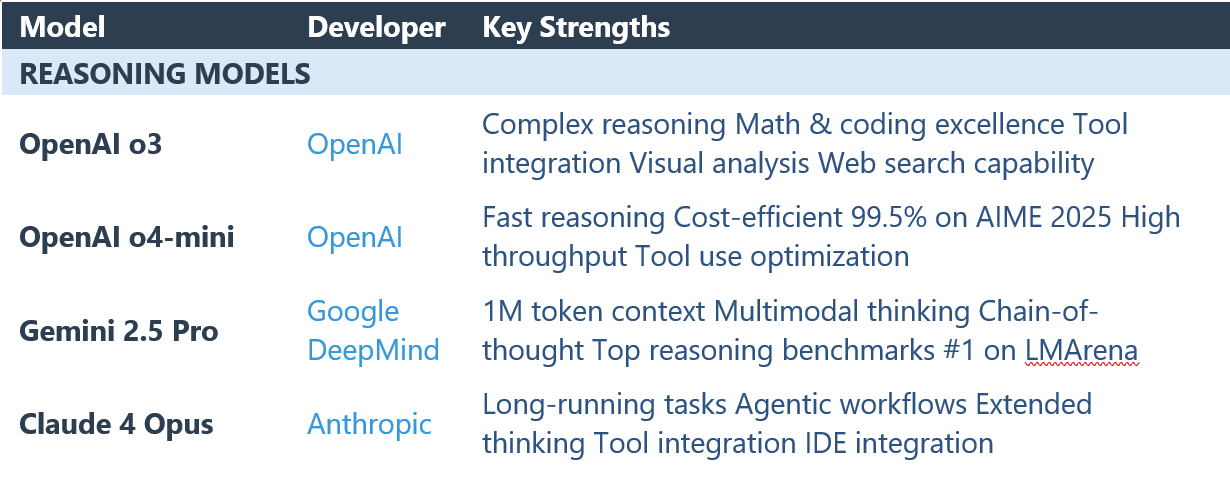

Think of an LLM as an industrial‑grade autocomplete onsteroids. It studies trillions of words and code snippets, then predicts whatcomes next—whether that’s finishing a sentence, writing a contract clause, oranswering a customer‑service email. Under the hood, it’s just matrix mathrunning on GPU clusters, but the practical upshot is a system that can read,reason, and write at near‑expert levels.

How It Works (Sans Jargon)

LLMs use a neural network architecture called transformers,which process language by predicting the next word in a sequence based oncontext.

For example, when you see:

“That’s one small step for man, one giant leap for________”

…your brain instantly fills in “mankind.” That’sexactly what an LLM does — it takes your input and, using context, predicts themost likely next word. But instead of drawing on personal memory, it draws onbillions of examples it encountered during training.

It’s similar to how you instinctively know that:

4 × 4 = 16

You don’t need to calculate it — you’ve seen it so manytimes that it feels automatic. LLMs operate the same way. They've been exposedto language patterns over and over, so they can complete sentences or answerquestions almost instantly.